publications

Publications in reverse chronological order.

2025

- Nonparametric Additive Value Functions: Interpretable Reinforcement Learning with an Application to Surgical Recovery. Patrick Emedom-Nnamdi, Timothy R. Smith, Jukka-Pekka Onnela, and Junwei Lu. Annals of Applied Statistics, 2025

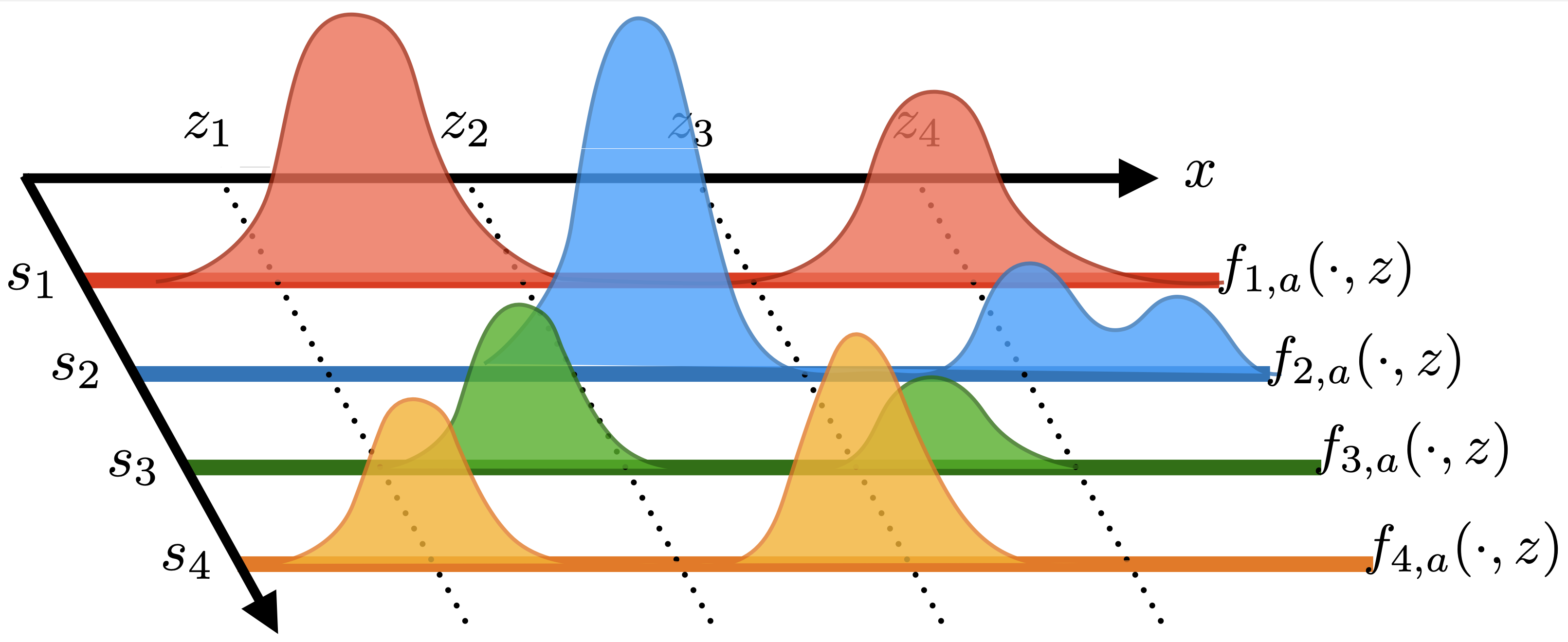

We propose a nonparametric additive model for estimating interpretable value functions in reinforcement learning, with an application in optimizing postoperative recovery through personalized, adaptive recommendations. While reinforcement learning has achieved significant success in various domains, recent methods often rely on black-box approaches, such as neural networks, which hinder the examination of individual feature contributions to a decision-making policy. Our novel method offers a flexible technique for estimating action-value functions without explicit parametric assumptions, overcoming the limitations of the linearity assumption of classical algorithms. By incorporating local kernel regression and basis expansion, we obtain a sparse, additive representation of the action-value function, enabling local approximation and retrieval of nonlinear, independent contributions of select state features and the interactions between joint feature pairs. We validate our approach through a simulation study and apply it to spine disease recovery, uncovering recommendations aligned with clinical knowledge. This method bridges the gap between flexible machine learning techniques and the interpretability required in healthcare applications, paving the way for more personalized interventions.

- Design and feasibility of smartphone-based digital phenotyping for long-term mental health monitoring in adolescents. D. Huang, P. Emedom-Nnamdi, J. P. Onnela, and A. Van Meter. PLOS Digital Health, 2025

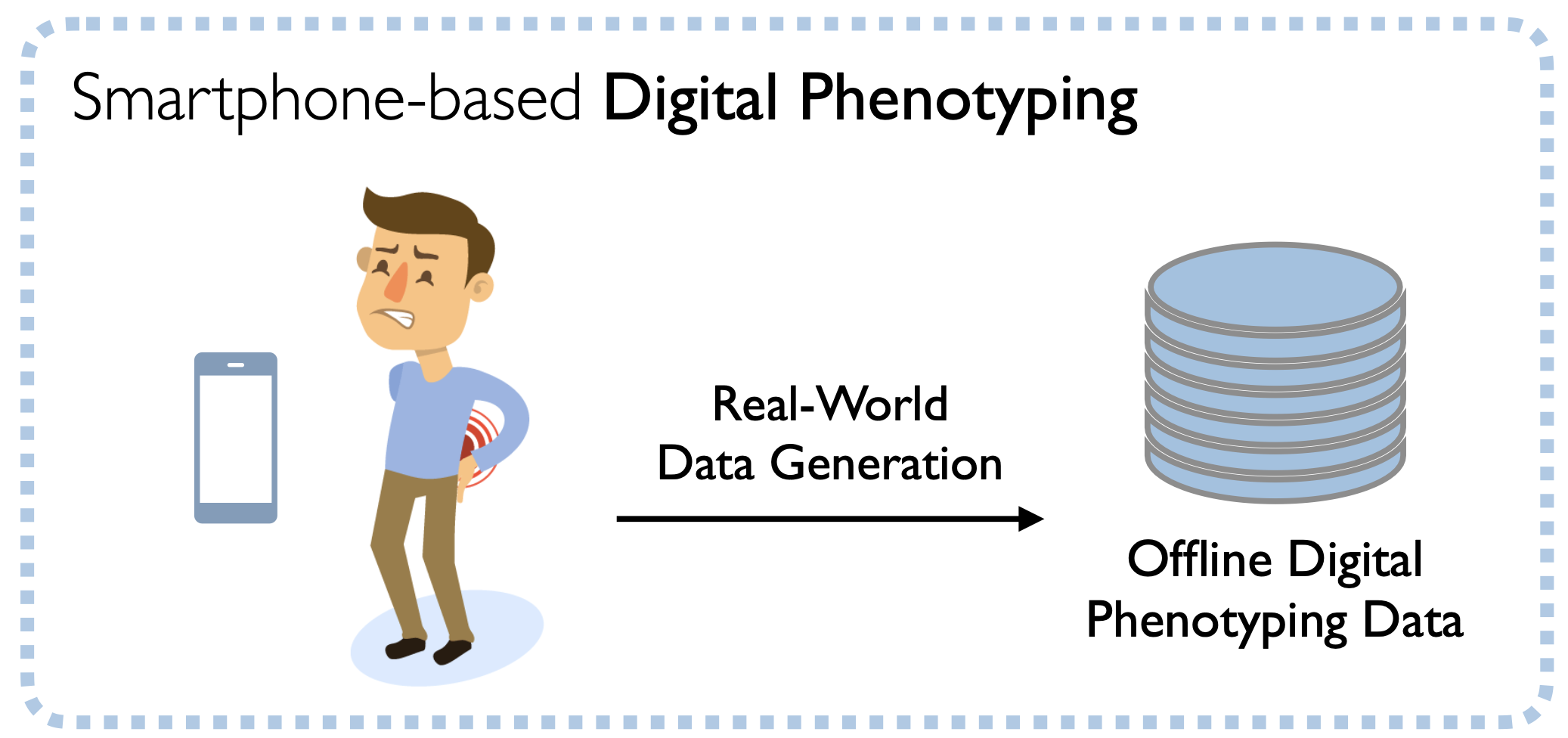

Assessment of psychiatric symptoms relies on subjective self-report, which can be unreliable. Digital phenotyping collects data from smartphones to provide near-continuous behavioral monitoring. It can be used to provide objective information about an individual’s mental state to improve clinical decision-making for both diagnosis and prognostication. The goal of this study was to evaluate the feasibility and acceptability of smartphone-based digital phenotyping for long-term mental health monitoring in adolescents with bipolar disorder and typically developing peers. Participants (aged 14–19) with bipolar disorder (BD) or with no mental health diagnoses were recruited for an 18-month observational study. Participants installed the Beiwe digital phenotyping app on their phones to collect passive data from their smartphone sensors and thrice-weekly surveys. Participants and caregivers were interviewed monthly to assess changes in the participant’s mental health. Analyses focused on 48 participants who had completed participation. Average age at baseline was 15.85 years (SD = 1.37). Approximately half (54%) identified as female, and 54% identified with a minoritized racial/ethnic background. Completion rates across data types were high, with 99% (826/835) of clinical interviews completed, 89% (22,233/25,029) of passive data collected, and 47% (4,945/10,448) of thrice-weekly surveys submitted. The proportion of days on which passive data were collected was consistent over time for both groups; clinical interview and active survey completion decreased over the study course. Results of this study suggest digital phenotyping has significant potential as a method of long-term mental health monitoring in adolescents. In contrast to traditional methods, including interview and self-report, it is lower burden and provides more complete data over time. A necessary next step is to determine how well the digital data capture changes in mental health to determine the clinical utility of this approach.

- A Digital Phenotypic Assessment in Neuro-Oncology (DANO): A Pilot Study on Sociability Changes in Patients Undergoing Treatment for Brain Malignancies. F. Siddi, P. Emedom-Nnamdi, M. P. Catalino, A. Rana, A. Boaro, H. Y. Dawood, F. Sala, J.-P. Onnela, and T. R. Smith. Cancers, 2025

Background: The digital phenotyping tool has great potential for the deep characterization of neurological and quality-of-life assessments in brain tumor patients. Phone communication activities (details on call and text use) can provide insight into the patients’ sociability. Methods: We prospectively collected digital-phenotyping data from six brain tumor patients. The data were collected using the Beiwe application installed on their personal smartphones. We constructed several daily sociability features from phone communication logs, including the number of incoming and outgoing text messages and calls, the length of messages and duration of calls, message reciprocity, the number of communication partners, and number of missed calls. We compared variability in these sociability features against those obtained from a control group, matched for age and sex, selected among patients with a herniated disc. Results: In brain tumor patients, phone-based communication appears to deteriorate with time, as evident in the trend for total outgoing minutes, total outgoing calls, and call out-degree. Conclusions: These measures indicate a possible decrease in sociability over time in brain tumor patients that may correlate with survival. This exploratory analysis suggests that a quantifiable digital sociability phenotype exists and is comparable for patients with different survival outcomes. Overall, assessing neurocognitive function using digital phenotyping appears promising.

- Assessing Mobility in Patients With Glioblastoma Using Digital Phenotyping—Piloting the Digital Assessment in Neuro-Oncology. Noah L. A. Nawabi, Patrick Emedom-Nnamdi, John L. Kilgallon, Jakob V. E. Gerstl, David J. Cote, Rohan Jha, Jacob G. Ellen, Krish M. Maniar, Christopher S. Hong, Hassan Y. Dawood, and 2 more authors. Neurosurgery, 2025

BACKGROUND AND OBJECTIVES: Digital phenotyping (DP) enables objective measurements of patient behavior and may be a useful tool in assessments of quality-of-life and functional status in neuro-oncology patients. We aimed to identify trends in mobility among patients with glioblastoma (GBM) using DP. METHODS: A total of 15 patients with GBM enrolled in a DP study were included. The Beiwe application was used to passively collect patient smartphone global positioning system data during the study period. We estimated step count, time spent at home, total distance traveled, and number of places visited in the preoperative, immediate postoperative, and late postoperative periods. Mobility trends for patients with GBM after surgery were calculated by using local regression and were compared with preoperative values and with values derived from a nonoperative spine disease group. RESULTS: One month postoperatively, median values for time spent at home and number of locations visited by patients with GBM decreased by 1.48 h and 2.79 locations, respectively. Two months postoperatively, these values further decreased by 0.38 h and 1.17 locations, respectively. Compared with the nonoperative spine group, values for time spent at home and the number of locations visited by patients with GBM 1 month postoperatively were less than control values by 0.71 h and 2.79 locations, respectively. Two months postoperatively, time spent at home for patients with GBM was higher by 1.21 h and locations visited were less than nonoperative spine group values by 1.17. Immediate postoperative values for distance traveled, maximum distance from home, and radius of gyration for patients with GBM increased by 0.346 km, 2.24 km, and 1.814 km, respectively, compared with preoperative values. CONCLUSIONS: Trends in the mobility of patients with GBM throughout treatment were quantified using DP in this study. DP has the potential to quantify patient behavior and recovery objectively and with minimal patient burden.
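
As a rough illustration of two of the mobility measures named in the abstract above (radius of gyration and maximum distance from home), the following Python sketch computes both from a day's location samples. It assumes locations are already projected to planar coordinates in kilometers and weights every sample equally; the function names and the sample data are hypothetical, and the study's own definitions (for example, any time-weighting) may differ.

```python
import math


def radius_of_gyration(points_km):
    """Root-mean-square distance of the samples from their centroid (km).

    Assumption: unweighted samples in planar (x, y) kilometers.
    """
    if not points_km:
        raise ValueError("need at least one location sample")
    n = len(points_km)
    cx = sum(x for x, _ in points_km) / n
    cy = sum(y for _, y in points_km) / n
    mean_sq = sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points_km) / n
    return math.sqrt(mean_sq)


def max_distance_from_home(points_km, home_km):
    """Largest straight-line distance between home and any sample (km)."""
    hx, hy = home_km
    return max(math.hypot(x - hx, y - hy) for x, y in points_km)


# Hypothetical day: two samples at home, two at a place 2 km east of home.
day = [(0.0, 0.0), (2.0, 0.0), (2.0, 0.0), (0.0, 0.0)]
home = (0.0, 0.0)

rog = radius_of_gyration(day)
far = max_distance_from_home(day, home)
```

Here the centroid sits 1 km east of home, so every sample lies 1 km from it; the radius of gyration describes spread around the centroid, while the maximum distance is measured from home.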

2023

- Knowledge Transfer from Teachers to Learners in Growing-Batch Reinforcement Learning. Patrick Emedom-Nnamdi, Abram L. Friesen, Bobak Shahriari, Nando de Freitas, and Matt W. Hoffman. International Conference on Learning Representations (ICLR) – Reincarnating RL Workshop, 2023

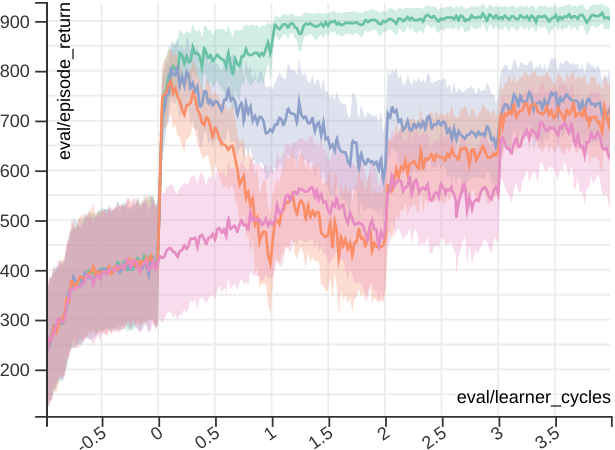

Standard approaches to sequential decision-making exploit an agent’s ability to continually interact with its environment and improve its control policy. However, due to safety, ethical, and practicality constraints, this type of trial-and-error experimentation is often infeasible in many real-world domains such as healthcare and robotics. Instead, control policies in these domains are typically trained offline from previously logged data or in a growing-batch manner. In this setting a fixed policy is deployed to the environment and used to gather an entire batch of new data before being aggregated with past batches and used to update the policy. This improvement cycle can then be repeated multiple times. While a limited number of such cycles is feasible in real-world domains, the quality and diversity of the resulting data are much lower than in the standard continually-interacting approach. However, data collection in these domains is often performed in conjunction with human experts, who are able to label or annotate the collected data. In this paper, we first explore the trade-offs present in this growing-batch setting, and then investigate how information provided by a teacher (i.e., demonstrations, expert actions, and gradient information) can be leveraged at training time to mitigate the sample complexity and coverage requirements for actor-critic methods. We validate our contributions on tasks from the DeepMind Control Suite.

- Interpretable Statistical Learning for Real-World Behavioral Data. Patrick Ugochukwu Emedom-Nnamdi. Harvard University, 2023

The rapid development of data collection methods and analysis techniques has revolutionized our understanding of human behavior and its relationship to health outcomes. However, despite the increasing availability of real-world behavioral data, the effective use of this information for real-time prediction and intervention remains a significant challenge. This dissertation explores interpretable statistical learning methods for real-world behavioral data, with a focus on overcoming limitations in episodic data collection by leveraging smartphone-based digital phenotyping. The approaches explored ultimately provide a scalable method for utilizing real-world history data on human behavior to inform decision-making and interventions, while improving current standards of care. Chapter 1 presents a novel method for estimating interpretable value functions in reinforcement learning. By incorporating local kernel regression and basis expansion, we develop a sparse, additive representation of the action-value function. This allows us to approximate the action-value function and retrieve the nonlinear, independent contributions of select features and joint feature pairs. We validate this approach through a simulation study and an application to spine disease, uncovering recovery recommendations in line with clinical knowledge. Chapter 2 explores the trade-offs of learning in the growing-batch reinforcement learning setting and investigates how information provided by a teacher (i.e., demonstrations, expert actions, and gradient information) can be leveraged during training to mitigate the sample complexity and coverage requirements for actor-critic methods. We validate our contributions on tasks from the DeepMind Control Suite. Chapter 3 introduces an approach where we use hidden semi-Markov models on smartphone activity logs to identify key patterns of differentiation in smartphone usage among adolescents with bipolar disorder and their typically-developing peers. This analysis enables the identification of latent constructs that correspond to resting and active smartphone usage, providing insights into the long-term behavioral trends in adolescents with bipolar disorder. Chapter 4 presents the Digital Assessment in Neuro-Oncology (DANO) pilot, which leverages smartphone-based digital phenotyping to monitor post-operative recovery in glioblastoma patients. We analyze passive GPS and accelerometer data to construct mobility patterns and compare these patterns with a control group of non-operative spine disease patients. Our findings reveal significant changes in mobility among glioblastoma patients during the first six months following surgery and between subsequent cycles of chemotherapy.

- Stasis: Reinforcement Learning Simulators for Human-Centric Real-World Environments. Georgios Efstathiadis, Patrick Emedom-Nnamdi, Arinbjörn Kolbeinsson, Jukka-Pekka Onnela, and Junwei Lu. ICLR 2023 – Workshop on Trustworthy Machine Learning for Healthcare, 2023

We present ongoing work toward building Stasis, a suite of reinforcement learning (RL) environments that aim to maintain realism for human-centric agents operating in real-world settings. Through representation learning and alignment with real-world offline data, Stasis allows for the evaluation of RL algorithms in offline environments with adjustable characteristics, such as observability, heterogeneity, and levels of missing data. We aim to introduce environments that encourage training RL agents that are capable of maintaining a level of performance and robustness comparable to agents trained in real-world online environments, while avoiding the high cost and risks associated with making mistakes during online training. We provide examples of two environments that will be part of Stasis and discuss its implications for the deployment of RL-based systems in sensitive and high-risk areas of application.

2017

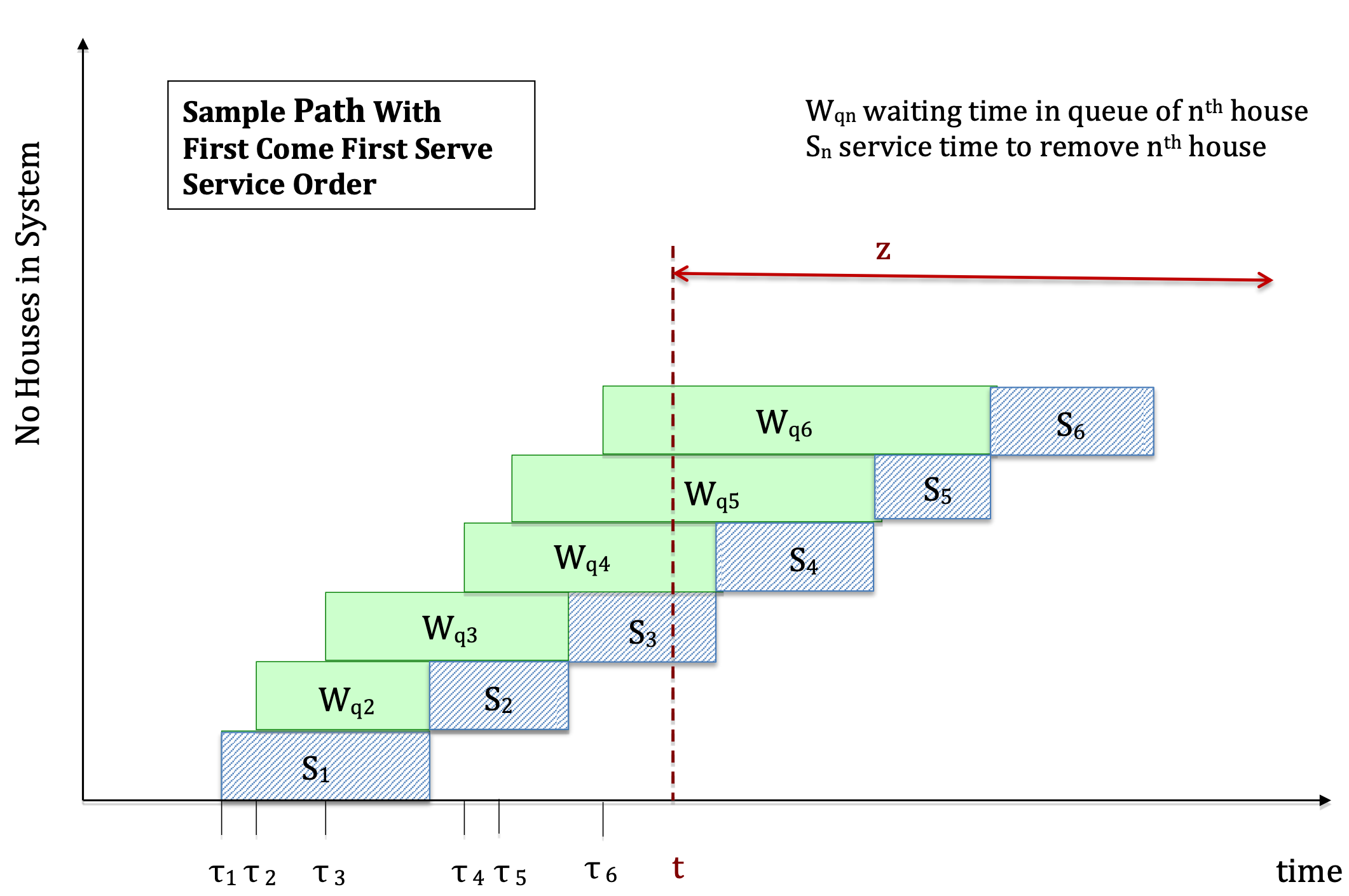

- Queueing Analysis of a Chagas Disease Control Campaign. Maria T. Rieders, Patrick Emedom-Nnamdi, and Michael Z. Levy. 2017

A critical component of preventing the spread of vector-borne diseases such as Chagas disease is door-to-door campaigns by public health officials that apply insecticide in order to eradicate the vector infestation of households. The success of such campaigns depends on adequate household participation during the active phase as well as on sufficient follow-up during the surveillance phase, when newly infested houses or infested houses that had not participated in the active phase will receive treatment. Queueing models, which are widely used in operations management, give us a mathematical representation of the operational efforts needed to contain the spread of infestation. By modeling the queue as consisting of all infested houses in a given locality, we capture the dynamics of the insect population due to prevalence of infestation and to the additional growth of infestation by redispersion, i.e., by the spread of infestation to previously uninfested houses during the wait time for treatment. In contrast to traditional queueing models, houses waiting for treatment are not known but must be identified through a search process by public health workers. Thus, both the arrival rate of houses to the queue and the removal rate from the queue depend on the current level of infestation. We incorporate these dependencies through a load-dependent queueing model, which allows us to estimate the long-run average rate of removing houses from the queue and therefore the cost associated with a given surveillance program. The model is motivated by and applied to an ongoing Chagas disease control campaign in Arequipa, Peru.
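
To make the idea of a load-dependent queue concrete, here is a minimal Python sketch of a finite birth-death chain in which both the arrival rate and the removal rate depend on the current number of infested houses. It is not the paper's model: the rate functions, the cap of 40 houses, and all names are hypothetical choices made only for illustration. The sketch computes the stationary distribution from the standard product form and then the long-run average removal rate.

```python
def stationary_distribution(arrival, removal, max_houses):
    """Stationary probabilities for a birth-death chain on 0..max_houses.

    Uses the product form pi[n] proportional to
    arrival(0) * ... * arrival(n-1) / (removal(1) * ... * removal(n)).
    """
    weights = [1.0]
    for n in range(1, max_houses + 1):
        weights.append(weights[-1] * arrival(n - 1) / removal(n))
    total = sum(weights)
    return [w / total for w in weights]


def average_removal_rate(pi, removal):
    """Long-run average rate at which houses leave the queue."""
    return sum(p * removal(n) for n, p in enumerate(pi) if n > 0)


# Hypothetical rates (houses per week), chosen only for illustration.
def arrival(n):
    # Baseline infestation plus redispersion that grows with infestation.
    return 0.3 + 0.02 * n


def removal(n):
    # Search finds infested houses more easily when more of them exist.
    return 2.0 * n / (n + 5.0)


MAX_HOUSES = 40  # assumed cap on the number of infested houses
pi = stationary_distribution(arrival, removal, MAX_HOUSES)
rate = average_removal_rate(pi, removal)
```

In steady state the average removal rate equals the average rate of accepted arrivals, which gives a quick consistency check on any choice of rate functions.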